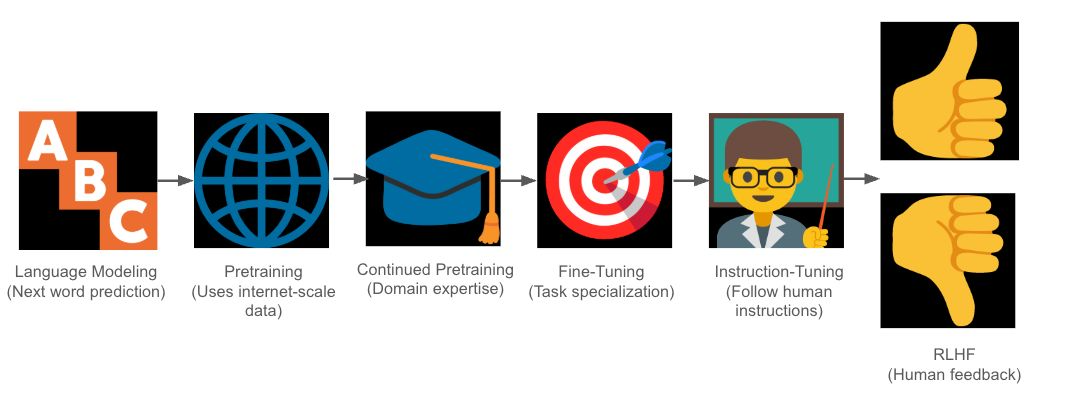

We will start by explaining the most basic building block of LLMs: language modeling, which dates back to the 1980s and 1990s and later became popular with the advent of neural networks in the early 2010s.

In its simplest form, language modeling is about learning to predict the next word in a sentence. This task, known as next-word prediction, is how LLMs learn language. The model accomplishes it by estimating a probability distribution over words, which lets it assign a probability to any candidate next word given the context provided by the previous words.
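To make the idea of a probability distribution over next words concrete, here is a minimal sketch using simple word-pair counts (a bigram model). This is a deliberate simplification and not how an LLM works internally: an LLM uses a neural network and conditions on the whole preceding context, while this toy looks only at the single previous word. The function names and the tiny corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        # Pair every word with the word that comes right after it.
        for prev_word, next_word in zip(words, words[1:]):
            counts[prev_word][next_word] += 1
    return counts


def next_word_distribution(counts, prev_word):
    """Turn raw follower counts into probabilities that sum to 1."""
    followers = counts.get(prev_word.lower())
    if not followers:
        return {}  # word never seen as context: no estimate available
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}


# Hypothetical toy corpus, for illustration only.
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]

model = train_bigram_model(corpus)
dist = next_word_distribution(model, "the")

# Print candidate next words from most to least probable.
for word, prob in sorted(dist.items(), key=lambda item: -item[1]):
    print(f"P({word!r} | 'the') = {prob:.2f}")
```

In this corpus, "the" is followed by another word six times, and two of those are "cat", so the model estimates the probability of "cat" after "the" at about 0.33, higher than any other candidate. An LLM produces the same kind of output, a probability for every word in its vocabulary, but computes it from a far richer view of the context.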
